Kestrel, the Department of Energy’s newest supercomputer, has taken flight. The impressive machine would never have left the nest without Hewlett Packard Enterprise, the prime contractor responsible for bringing it to life.

DOE tapped HPE in late 2021 to build the new platform to tackle ongoing renewable energy and energy-efficiency research. Kestrel will deliver more than five times the performance of its predecessor, Eagle, with 44 petaflops of computing power.

A petaflop is 1,000 trillion (or 1 quadrillion) floating-point operations per second; that’s mind-melting speed in human terms. A person would have to perform one calculation per second for 31,688,765 years to match what a 1-petaflop computer system does in 1 second, according to Indiana University IT Services.
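The comparison above is simple arithmetic, and a short sketch makes it easy to check. The function name and the year length used here (365.2422 days) are assumptions for illustration; the source does not say which year length was used, and other choices shift the result slightly.

```python
# Rough check of the "one calculation per second" comparison.
# Assumption: a year is 365.2422 days; the article does not state this.
PETA = 10**15
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365.2422


def years_for_human(petaflops: float, machine_seconds: float = 1.0) -> float:
    """Years a person needs, at one calculation per second, to match
    what a machine of the given petaflop rating does in machine_seconds."""
    operations = petaflops * PETA * machine_seconds
    human_seconds = operations  # one calculation per second
    return human_seconds / (DAYS_PER_YEAR * SECONDS_PER_DAY)


one_petaflop_years = years_for_human(1)   # roughly 31.7 million years
kestrel_years = years_for_human(44)       # roughly 1.4 billion years
print(f"{one_petaflop_years:,.0f} years for 1 petaflop-second")
print(f"{kestrel_years:,.0f} years for one second of a 44-petaflop system")
```

By the same reasoning, one second of work on a 44-petaflop system would take a person well over a billion years.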

Kestrel’s computing power will advance research in computational materials, continuum mechanics, and large-scale simulation and planning for future energy systems by applying new innovations in artificial intelligence and machine learning, according to the National Renewable Energy Laboratory’s project announcement.

NREL’s partnership with HPE is longstanding. The company built both the Eagle and Peregrine supercomputers, predecessors to Kestrel.

“There are continual technical challenges that drive what we need to do,” says Trish Damkroger, senior vice president and chief product officer of high-performance computing, AI and Labs at HPE.

How to Cool Kestrel Off

Damkroger has long experience with supercomputing, having held key posts at Sandia and Lawrence Livermore national labs as well as Intel. Some of the biggest challenges include generating the power required to operate a supercomputer and dealing with the heat created by the machines.

“We’ve gone to liquid cooling,” Damkroger says. “You don’t need any chillers or fans, and we’re even looking at warm-water cooling.”

Kestrel uses HPE Cray EX supercomputers. The system is made of individual compute “blades,” which carry all the components that make the supercomputer go: central processing units, fabric connections, printed circuit boards, and cooling and power components. There are also blades that hold HPE’s Slingshot switching elements. Cooling is built into each blade.

Liquid cooling loops running through the compute infrastructure cool the cabinets and components. A cooling distribution unit cools the liquid itself and removes heat from the system via a heat exchanger with data center water. The incoming water can be as warm as , which means chilling isn’t necessary and less electricity is required.

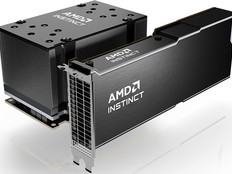

HPE manufactured the boards and blades using chips from Intel, NVIDIA and AMD. The onboard communications infrastructure and cooling system are proprietary.

“A lot of that stuff is unique to HPE,” Damkroger says.

Preparing for Kestrel’s Second Phase: GPUs

Kestrel will have 2,436 compute nodes available for high-performance computing tasks.

Phase two will begin in December with the installation of 132 graphics processing unit nodes, each with four NVIDIA H100 GPUs. Originally created for video rendering in computer games and simulators, GPUs have revolutionized supercomputing.

A CPU does serial tasks at very high speed. At best, it can handle a handful of operations at once. By contrast, a GPU uses parallel processing to do multiple calculations simultaneously and can handle thousands of operations in an instant.
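The difference is easiest to see when every calculation is independent of the others. The sketch below is only an analogy in ordinary Python, not GPU code: the function names are hypothetical, and a small pool of worker threads stands in for the thousands of operations a GPU handles at once. In standard Python the threaded version is not actually faster; the point is the shape of the work.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_shift(x: float) -> float:
    """One independent calculation; no element depends on another."""
    return 2.0 * x + 1.0


def run_serial(values):
    """Serial style: one operation after another, in order."""
    results = []
    for v in values:
        results.append(scale_and_shift(v))
    return results


def run_data_parallel(values, workers=8):
    """Data-parallel style: the same operation is handed out across
    many workers. The 8 workers are a stand-in for GPU parallelism."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs
        return list(pool.map(scale_and_shift, values))


values = [float(i) for i in range(10_000)]
serial = run_serial(values)
parallel = run_data_parallel(values)
print(serial == parallel)
```

Both versions produce the same results. Work that breaks down this way, into many identical and independent operations, is the kind that maps well onto a GPU.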

“There’s been a huge push toward using GPUs,” Damkroger says. “They’re easy to program, so you can go faster.”

AI applications require massive number crunching. It turns out GPUs are uniquely suited to the task, as vast quantities of data are run through neural networks to train AI applications such as driverless cars and ChatGPT.

The downside: GPUs use a huge amount of electricity. That’s one reason it’s unlikely you’ll hear your AI overlord banging on your front door anytime soon. A human brain imagining a nice day at the beach costs nothing. Meanwhile, a supercomputer can draw up to 30 megawatts of power, which by some estimates is as much as a small city uses.

“Kestrel represents new capabilities so that we can do better science, faster,” says Aaron Andersen, Kestrel engineering lead at NREL, in a video. “There's an awful lot of research questions and problems that just need more capability or need the GPU capability to make considerable progress.”

Kestrel is one of a new breed of machines that allows for far deeper research than previously possible.

“You can do much more with a larger compute size,” Damkroger says. “You can get to a small enough geometry to put an individual cloud in atmospheric modeling. You can fit more into a computer to see the interactions between things.”
